This missing piece in your data integration stack is costing you millions

Gottfried Sehringer

Chief Marketing Officer

Collecting and importing data files from customers, suppliers, partners, agents or other external sources is a necessary business process. It's also a process that many organizations struggle with. Some companies spend millions of dollars on manual workarounds and expensive development projects to cope with these challenges. Others live with the extra cost, security risk and negative revenue impact of poor data quality and availability.

But there's a better way…

Integration should be simple: ETL tools have been around for decades

There are, of course, robust ETL (Extract, Transform, Load) solutions available today, but they all rely on highly structured data and well-managed IT systems. They get tripped up as soon as companies need to receive and import data files that aren't well organized: files that come from countless sources such as end users, partners, suppliers, or customers, and that arrive in a range of formats such as CSV, XLS, PDF, or JSON.

These traditional systems ignore a piece of the puzzle that has become far more important as business IT has decentralized and users around the world connect from all types of devices.

The cost of the missing piece

Just because importing data files is an overlooked component of most data integration stacks doesn't mean companies aren't importing data.

Businesses import data constantly with the help of countless workarounds. Unfortunately, these workarounds introduce data quality issues and come at a high cost. The direct costs of personnel and manual work, supporting engineering resources and long-term maintenance are just the tip of the iceberg.

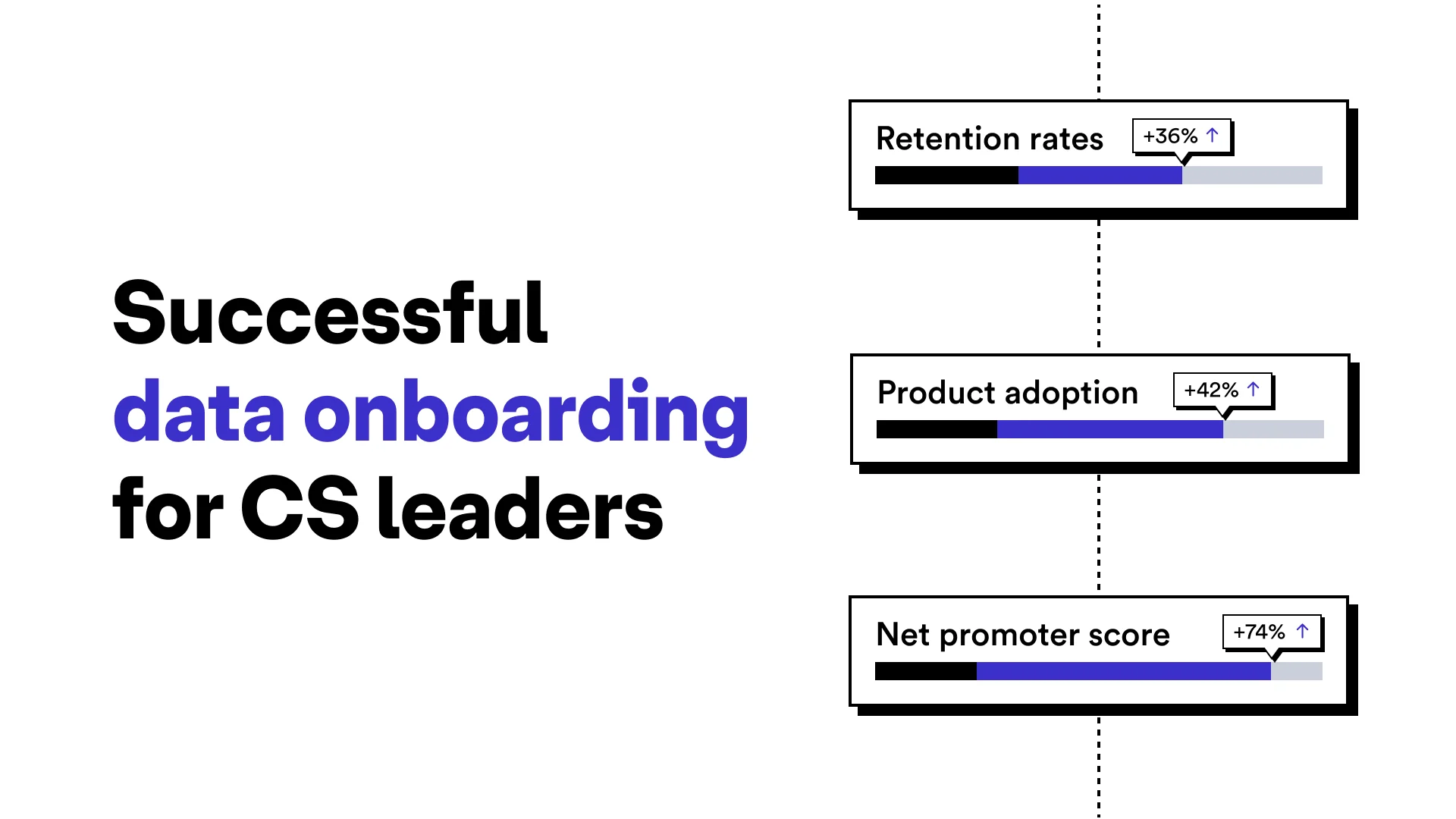

Any delay or complication in collecting, importing and onboarding data not only affects data quality and data cleaning costs but is also often the source of considerable user frustration.

Worse, these workarounds can lead to security and compliance issues and delay or prevent critical decision-making. The opportunity cost of lost and delayed revenue seriously increases the impact of data import challenges.

Considering all this, the lack of a solid data exchange solution can quickly add up to millions of dollars.

How to add the missing piece

How can businesses safely and efficiently collect, clean and import data that traditional tools can't easily handle?

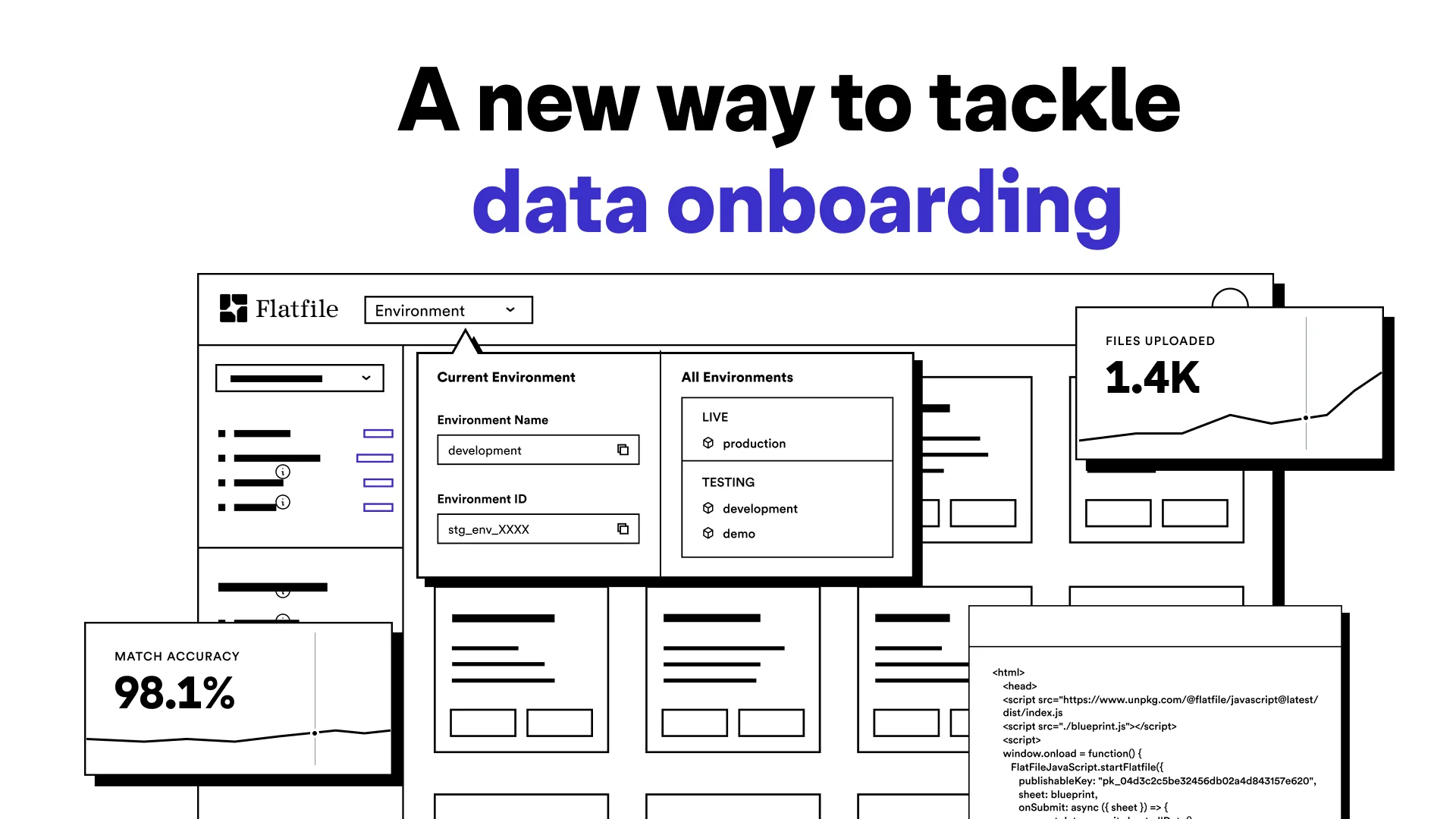

Companies need a solution that helps them import data files from many different sources and in various file formats, through processes that might include end-user interactions and detailed workflows. It should work independently or alongside traditional integration tools, and use advanced technology like AI to provide the speed, efficiency, and quality businesses need to make decisions accurately and quickly. That solution, generally referred to as "data import," "data collection," "data onboarding," or, more broadly, "data exchange," is the missing piece in most enterprise integration architectures.

Fortunately, that solution exists! Download this in-depth guide to find out how businesses of all sizes can safely and efficiently import a wide range of data file types from thousands or even millions of different sources.

The Introduction to Data Exchange: The Missing Piece in Enterprise Integration Architectures covers:

How common data file exchange challenges significantly impact businesses today

Key considerations for adding data file exchange to your integration architecture

Why and how ETL and data exchange complement each other

How AI can drastically improve data exchange processes (and when to be cautious)

How data exchange can help you maximize revenue opportunities, eliminate expensive workarounds, save resources, reduce data errors, accelerate decision-making and improve security and compliance

Data onboarding should be fast and painless

Our free in-depth guide will help you address data onboarding challenges and turn new customers into customers for life.

Flatfile has helped hundreds of enterprise clients (and plenty of scaleups) tackle their data migration challenges, supporting just about any business or data requirement. Reach out and connect with one of our data experts to find out how Flatfile can help you address your data onboarding use case and requirements.